How to Create an ADLA Linked Service in ADF V2

By: Ron L'Esteve | Updated: 2019-03-18 | Related: Azure Data Factory

Problem

As more traditional on-premises data warehouses are now being designed, developed and delivered in the cloud, the data integration, transformation, and orchestration layers are being built in Azure Data Factory, which is the Azure cloud equivalent of Microsoft's on-premises SQL Server Integration Services (SSIS).

As I become more comfortable with writing data transformation scripts in Azure Data Lake Analytics using the U-SQL language, I am now interested in integrating these scripts into my Azure Data Factory ETL pipeline. I know that there is a Data Lake Analytics U-SQL activity in the pipeline; however, when I try to add this U-SQL activity to my pipeline, I run into issues and errors configuring the new Azure Data Lake Analytics linked service. This article pinpoints these issues and offers a step-by-step solution for configuring a new Azure Data Lake Analytics linked service using Azure Data Factory.

Solution

Azure Data Factory is a Microsoft Azure cloud-based ETL service that can be used to design and orchestrate cloud-based data warehouses and data integration and transformation layers. Specifically, the Data Lake Analytics activity, containing the U-SQL job, allows us to transform data using custom U-SQL scripts stored in Azure Data Lake. However, configuring this U-SQL activity can seem like a daunting task, since it is not as seamless as it should be at the time of this article. This tip walks through an example of how to configure this U-SQL job in Azure Data Factory so that we can transform big data with U-SQL scripts orchestrated in Azure Data Factory.

Provisioning Azure Resources

Before I begin creating the linked service, I will need to provision a few Azure resources for the Azure Data Factory pipeline that will run a U-SQL script to transform my data and then load it to Azure SQL Database. Let's get started.

Creating a Data Factory

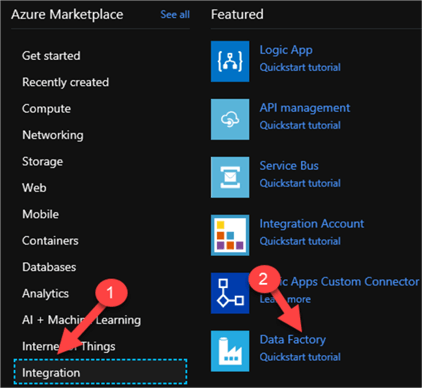

Let's start by creating a Data Factory by navigating to the Azure Marketplace in the Azure Portal and then clicking Integration > Data Factory.

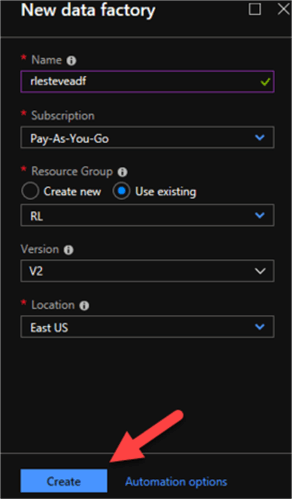

Once I enter the necessary details related to my new data factory, I will click Create.

Creating an Azure Data Lake

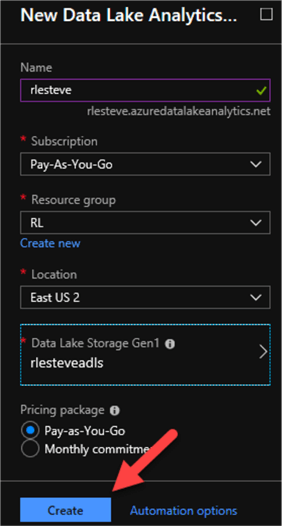

The last resource that I will need to provision for this process is my Azure Data Lake, which will consist of an Azure Data Lake Store and an Azure Data Lake Analytics account. Once again, I will navigate to the Azure Marketplace in the Azure Portal and click Analytics > Data Lake Analytics.

After I enter the configuration details for the new Data Lake Analytics account, I will click Create.

Creating an Azure Data Lake Analytics Linked Service

To create a new Azure Data Lake Analytics linked service, I will launch my Azure Data Factory by clicking on the following icon, which I have pinned to my dashboard.

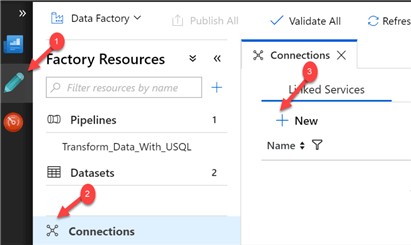

Once my Data Factory opens, I will click Author > Connections > New Linked Service as follows:

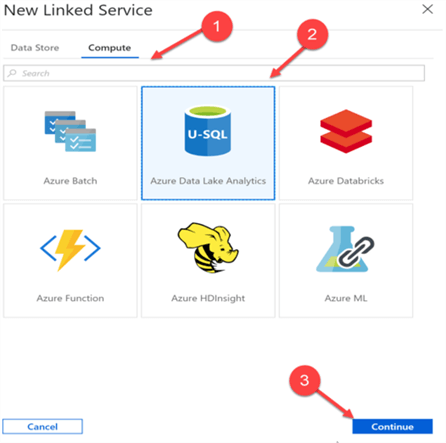

From there, I will select Compute > Azure Data Lake Analytics > Continue.

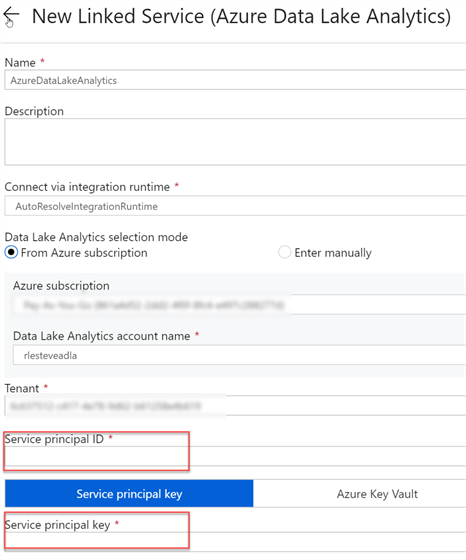

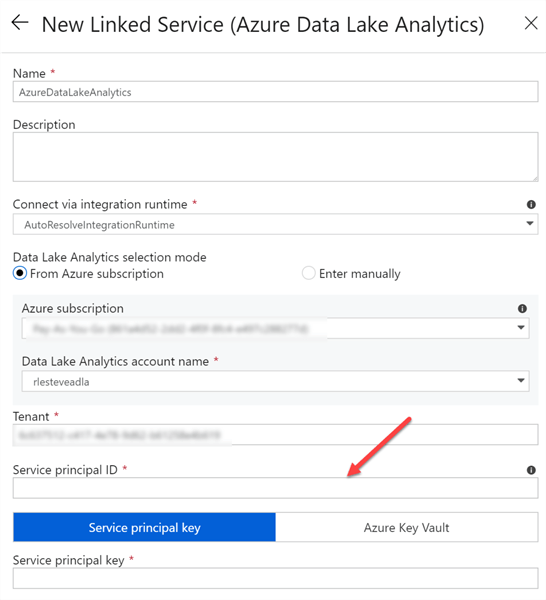

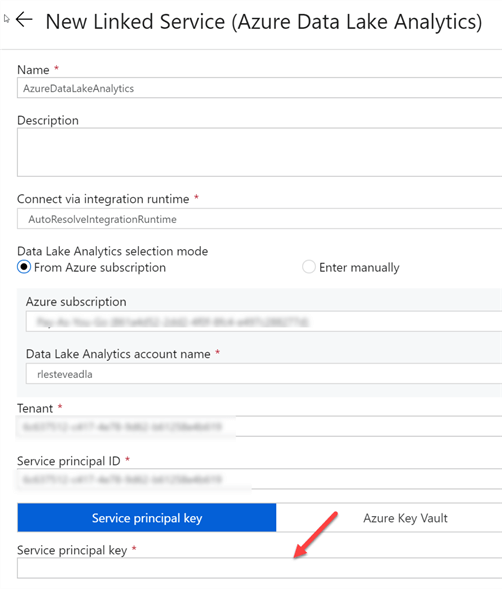

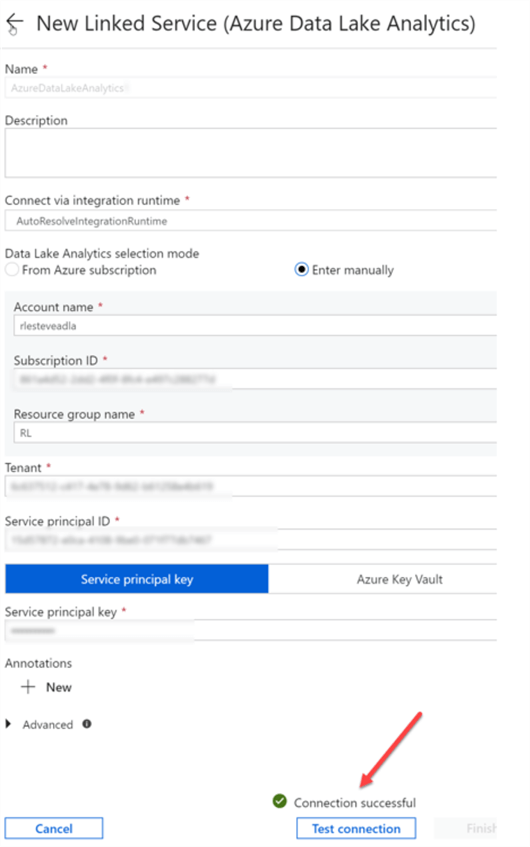

I'm now ready to begin creating my Azure Data Lake Analytics linked service by entering the following details:

Note that I'm being asked for a Service Principal ID and Service Principal Key. Since I don't have these credentials yet, I will need to obtain them in the following steps so that I can complete the configuration of the linked service and allow Azure Data Factory to execute my Data Lake U-SQL scripts.

To acquire the Service Principal ID and Key, I will need a service principal, which is similar to a proxy account that allows Azure services to connect to other services.
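For reference, the fields in the New Linked Service dialog correspond to a JSON definition that Data Factory stores behind the scenes. The following is a minimal sketch of that definition built in Python, assuming the documented `AzureDataLakeAnalytics` linked service schema; the function name and every value passed to it are hypothetical placeholders, not values from this walkthrough.

```python
import json


def build_adla_linked_service(name, account_name, tenant, subscription_id,
                              resource_group, sp_id, sp_key):
    """Return an Azure Data Lake Analytics linked service definition as a dict.

    All arguments are placeholders supplied by the caller; nothing is sent
    to Azure by this function.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureDataLakeAnalytics",
            "typeProperties": {
                "accountName": account_name,
                # Application ID of the registered app
                "servicePrincipalId": sp_id,
                # Key value generated on the app's Keys blade
                "servicePrincipalKey": {"type": "SecureString", "value": sp_key},
                "tenant": tenant,
                "subscriptionId": subscription_id,
                "resourceGroupName": resource_group,
            },
        },
    }


# Hypothetical example values, for illustration only.
definition = build_adla_linked_service(
    name="AzureDataLakeAnalyticsLinkedService",
    account_name="myadlaaccount",
    tenant="contoso.onmicrosoft.com",
    subscription_id="00000000-0000-0000-0000-000000000000",
    resource_group="my-resource-group",
    sp_id="11111111-1111-1111-1111-111111111111",
    sp_key="<service principal key>",
)
print(json.dumps(definition, indent=2))
```

In practice the key would come from a secret store rather than being written into a script; the literal placeholder here only shows where the value goes.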

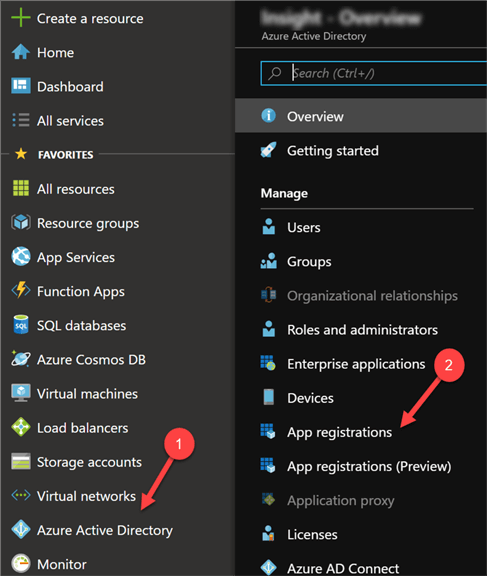

I'll start by navigating to my Azure Active Directory in the Azure Portal and then clicking 'App Registrations' as seen in the image below.

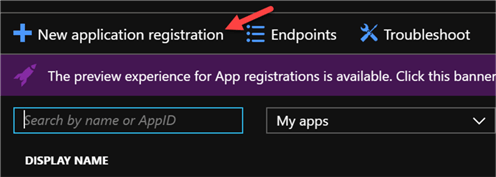

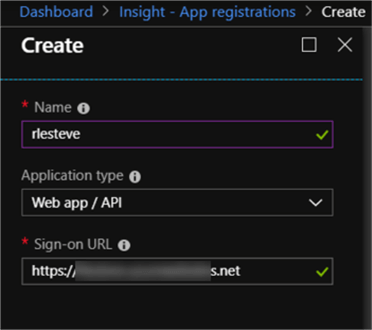

I'll then click 'New Application Registration' to create a new Service App.

Upon doing so, a new blade will appear, allowing me to enter my app's name and its Sign-on URL. When I first attempted this process, I created a new Web app in the portal. However, after additional discovery I realized I could enter a placeholder URL to achieve the same results.

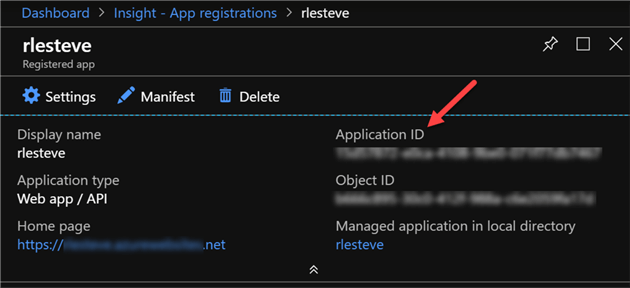

After entering my app details, I'll click the Create button to move on to the next step. Once my app is created, I will be able to see the Registered app details. The Application ID is the Service Principal ID, so I will copy this ID and paste it in my New Linked Service dialog box in Data Factory.
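Since the Application ID is a GUID, a quick format check can catch a common copy-and-paste mistake, such as pasting the app's display name instead of its ID. The helper below is a small sketch using only the Python standard library; the function name `is_guid` is hypothetical, and it checks the format only, not whether the ID exists in Azure Active Directory.

```python
import uuid


def is_guid(value):
    """Return True if value is a GUID in canonical 8-4-4-4-12 hex form."""
    try:
        text = value.strip()
        parsed = uuid.UUID(text)
    except (ValueError, AttributeError, TypeError):
        # Not a string, or not parseable as a UUID at all.
        return False
    # uuid.UUID also accepts braces and unhyphenated hex, so compare
    # against the canonical rendering to insist on the portal's format.
    return str(parsed) == text.lower()


print(is_guid("11111111-1111-1111-1111-111111111111"))
print(is_guid("my-registered-app"))
```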

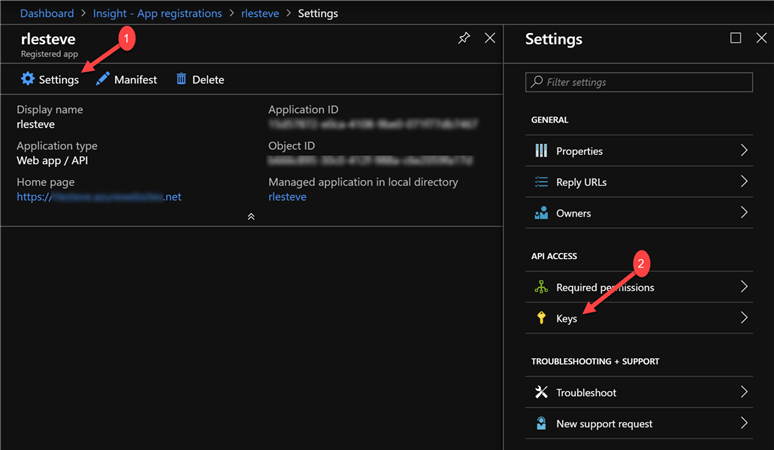

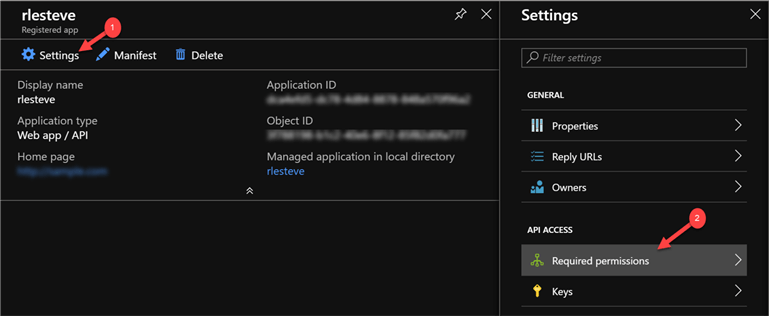

Next, I will need to generate my Service Principal Key to complete the New Linked Service registration. To do this, I will click Settings on my Registered app and then click Keys.

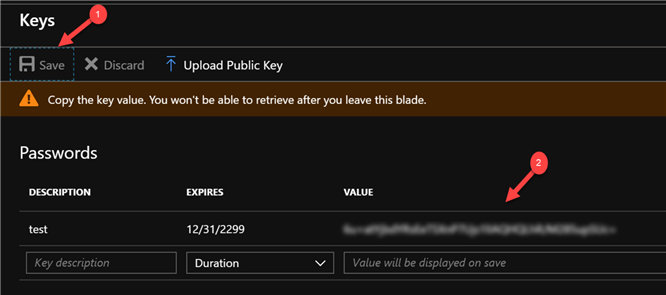

When the Keys blade opens, I will enter a new key description and the expiration duration, and then click Save. As I click Save, the new Key Value will be generated and visible. Note the alert which reminds me that the key value will no longer be visible after I leave this blade, so I will copy and save the Key Value.

Once again, I will navigate back to my New Linked Service registration in Data Factory and enter the Key Value in the Service Principal Key section of the linked service:

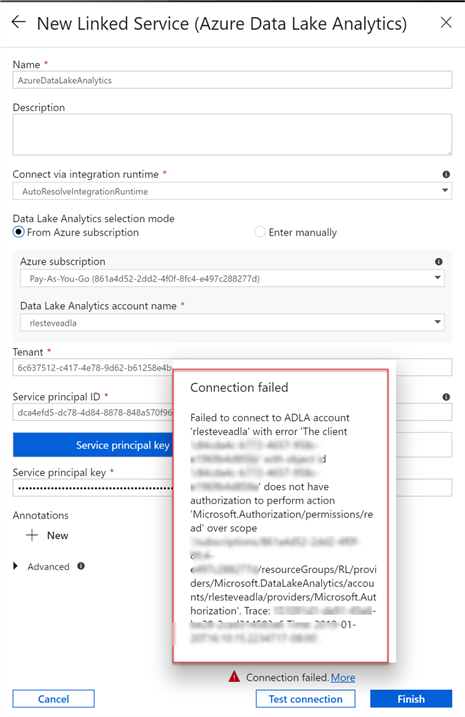

Now that I have entered my Service Principal ID and Service Principal Key, I would expect to be able to successfully complete the process of adding a new Data Lake Analytics linked service. Unfortunately, the connection still failed at this point, and a few more permissions need to be granted to complete this configuration.

Additional Permissions
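When a connection test fails, it can help to separate a bad ID/key pair from missing permissions. One option, outside of Data Factory, is to request a token for the service principal with the OAuth 2.0 client credentials grant. The sketch below only builds that request and does not send it. It assumes the Azure AD v1 token endpoint and uses the Azure Resource Manager resource URI as a default; the function name and all argument values are hypothetical placeholders.

```python
from urllib.parse import urlencode


def build_token_request(tenant, client_id, client_secret,
                        resource="https://management.azure.com/"):
    """Build the URL and form body for a client credentials token request.

    Assumption: the Azure AD v1 endpoint is used. The caller would POST
    the body with Content-Type application/x-www-form-urlencoded.
    """
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,          # Service Principal ID (Application ID)
        "client_secret": client_secret,  # Service Principal Key
        "resource": resource,
    })
    return url, body


# Hypothetical example values, for illustration only.
url, body = build_token_request(
    tenant="contoso.onmicrosoft.com",
    client_id="11111111-1111-1111-1111-111111111111",
    client_secret="<service principal key>",
)
print(url)
```

If such a request returns a token, the ID and key are valid, and the remaining failure is more likely a permissions issue on the Data Lake Analytics account.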

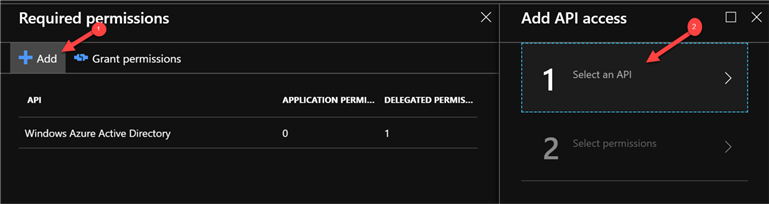

The first step of these additional permissions will be to navigate back to my Registered app and click Settings > Required Permissions.

Next, I will click Add to begin adding an API.

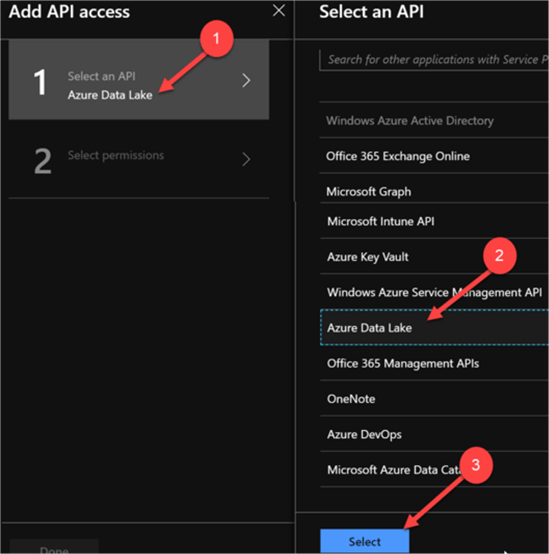

I will then select Azure Data Lake as my API and click Select.

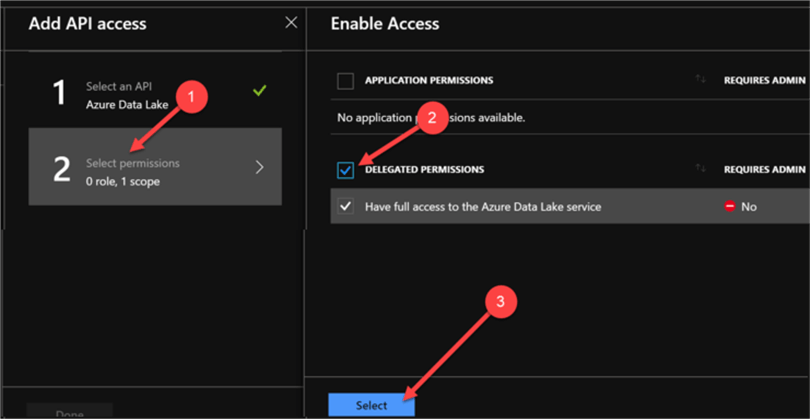

I'll then move on to the next step of selecting permissions, where I will click Delegated permissions and then click Select.

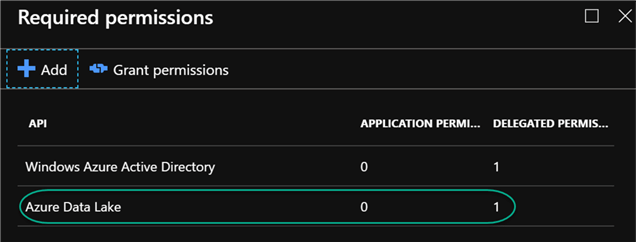

When the Required permissions box is displayed, I notice that Azure Data Lake is set as a Delegated Permission.

This completes our work with the Registered App.

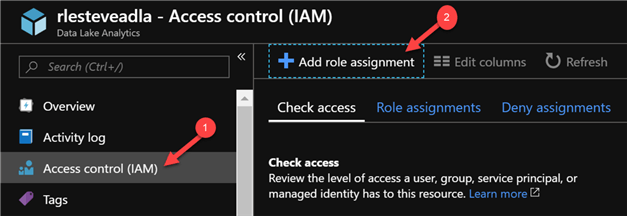

Next, I will need to do a few final configurations in my Data Lake Analytics account to give my registered app permissions to my data lake account. I'll navigate to my Data Lake Analytics account, click Access Control (IAM), and then click Add role assignment.

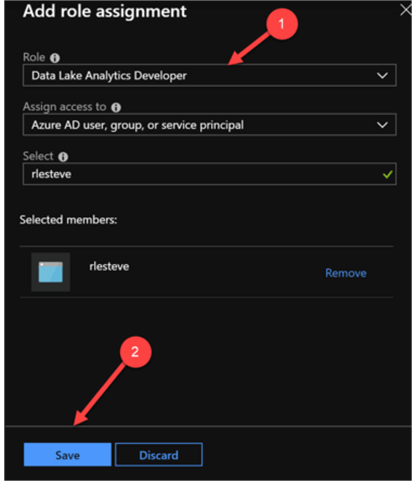

In the Add role assignment blade, I will select Data Lake Analytics Developer as my role, select my registered app, and then click Save.

Now that the Azure resource-level IAM Access Control is complete, I can proceed to create my Data Lake-level permissions.
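The same role assignment can also be described as data, which is useful when scripting it later. The sketch below builds the scope of the Data Lake Analytics account and the body of an Azure role assignment without sending anything. The helper names are hypothetical, and the role definition GUID for Data Lake Analytics Developer is left as a caller-supplied placeholder rather than assumed. Note that a role assignment's `principalId` is the service principal's object ID, which is different from the Application ID used in the linked service.

```python
def adla_account_scope(subscription_id, resource_group, account_name):
    """Return the resource ID of a Data Lake Analytics account."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataLakeAnalytics/accounts/{account_name}"
    )


def role_assignment_body(subscription_id, role_definition_guid,
                         principal_object_id):
    """Return the request body for a role assignment at some scope.

    role_definition_guid is a placeholder: look up the GUID of the
    Data Lake Analytics Developer role before using this.
    """
    return {
        "properties": {
            "roleDefinitionId": (
                f"/subscriptions/{subscription_id}"
                "/providers/Microsoft.Authorization"
                f"/roleDefinitions/{role_definition_guid}"
            ),
            # Object ID of the service principal, not its Application ID.
            "principalId": principal_object_id,
        }
    }


# Hypothetical example values, for illustration only.
scope = adla_account_scope(
    "00000000-0000-0000-0000-000000000000", "my-resource-group", "myadlaaccount")
body = role_assignment_body(
    "00000000-0000-0000-0000-000000000000",
    "<role definition guid>",
    "22222222-2222-2222-2222-222222222222",
)
print(scope)
```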

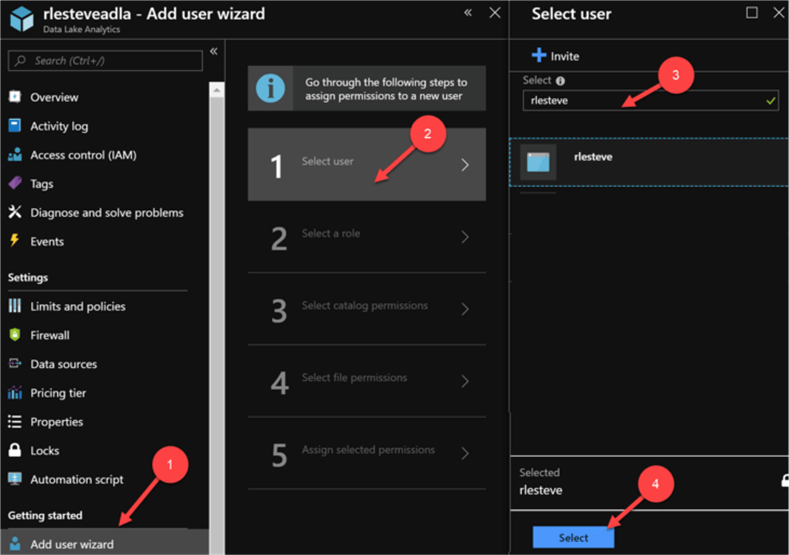

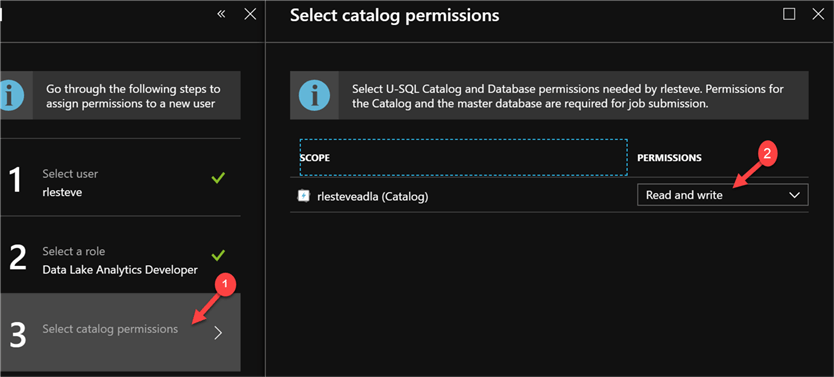

To do this, I will click Add User Wizard, select my registered app name, and then click Select.

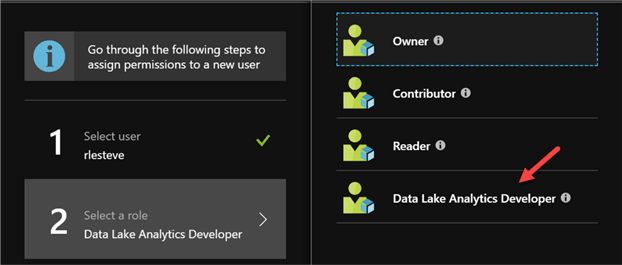

Next, I will continue to select Data Lake Analytics Developer as the role.

As I move on to the catalog permissions, I will grant Read and Write permissions to the Data Lake Analytics catalog and then click Select.

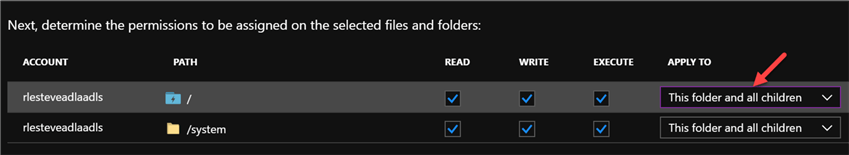

Next, I will be asked to determine the permissions to be assigned on the selected files and folders. I will click 'This folder and all children' and click Select.

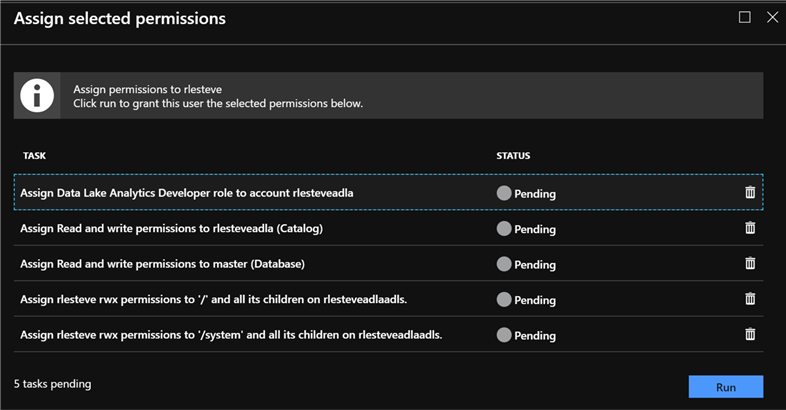

I will then be presented with the Assign Permissions task list, based on my previous selections, with each task showing a status of 'Pending'.

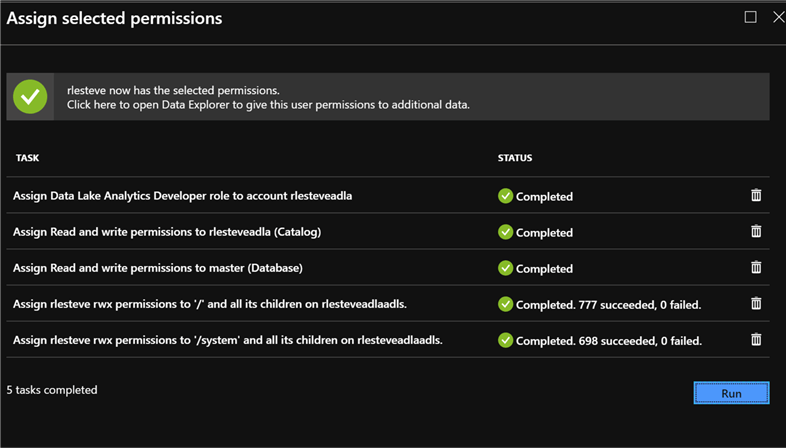

When I click Run, the jobs will begin running and the tasks will complete.

As soon as the tasks complete, I will click Done to finally complete the Service Principal registration process. Now I can return to my Data Factory and re-test my connection to my new Azure Data Lake Analytics linked service. Note that since my Data Factory linked service dialog had been open for so long, it will most probably need to be re-created to pick up the new settings and permissions.

After I re-enter my saved Service Principal ID and Key, I'll click Test Connection one last time, and I finally see a 'Connection Successful' status. I'll then click Finish, and now I am ready to begin transforming data with U-SQL using Azure Data Factory.

Next Steps

- In this article, I walked through a step-by-step example of how to create an Azure Data Lake Analytics Linked Service in Azure Data Factory v2.

- As a next step, my article on Using Azure Data Factory to Transform Data with U-SQL will go into detail on how to use this new Azure Data Lake Analytics Linked Service to create pipelines which will use Linked Services and Data Sets to process, transform and schedule data with U-SQL and Data Lake Analytics.

About the author

Ron L'Esteve is a seasoned Data Architect who holds an MBA and MSF. Ron has over 15 years of consulting experience with Microsoft Business Intelligence, data engineering, emerging cloud and big data technologies.

Article Last Updated: 2019-03-18

Source: https://www.mssqltips.com/sqlservertip/5930/create-azure-data-lake-linked-service-using-azure-data-factory/
